In my last post [read it here], we talked about two evaluation methods: audience tracking and surveys. Both require some planning before the evaluation starts, but very little input from the program facilitator during the program itself. Today, we’ll look at two evaluation methods that take more work to facilitate.

Skills or Knowledge Test

Informally, this is a pretty common evaluation method. You probably already ask your participants to answer a question based on information they’ve just learned, or to show off a new skill. If your program is built around building skills or learning new information, this is the most logical way to evaluate your effectiveness. A survey might tell you whether participants think they’ve learned something, but it doesn’t tell you if they actually have. For more reliable results, you’ll have to get them to prove it.

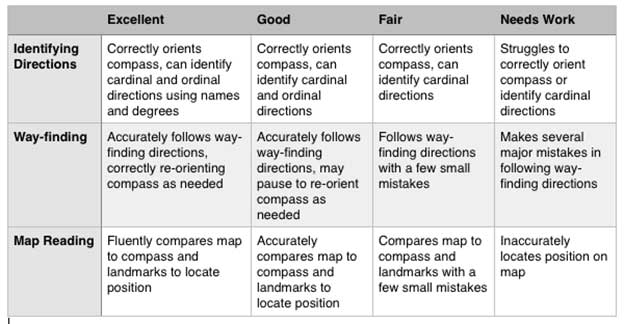

To record the results of this evaluation, you have a few choices. You can keep a written record on your own — for example, tallying up the number of participants who are able to find directions using a compass or answer a question correctly. Or you can ask participants to complete a brief test at the end of the program. (This is most effective as an evaluation tool when paired with an identical pre-test taken at the beginning of the program, so you can see what participants learned versus what they already knew.) You can also use a rubric to evaluate different levels of understanding.
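
If you collect pre-test and post-test scores, the comparison is simple arithmetic. Here is a minimal sketch in Python, assuming each participant has one score before and one score after the program. The names `TestResult` and `summarize` and the sample scores are made up for illustration, not taken from a real program.

```python
from dataclasses import dataclass


@dataclass
class TestResult:
    participant: str
    pre_score: int   # questions answered correctly before the program
    post_score: int  # questions answered correctly after the program


def summarize(results, max_score):
    """Compare post-test scores with pre-test scores for one program."""
    if not results:
        return {"participants": 0, "average_gain": 0.0,
                "improved": 0, "already_at_max": 0}
    gains = [r.post_score - r.pre_score for r in results]
    return {
        "participants": len(results),
        # Average number of additional correct answers after the program
        "average_gain": sum(gains) / len(gains),
        # Participants who scored higher on the post-test
        "improved": sum(1 for g in gains if g > 0),
        # Participants who already knew everything on the pre-test
        "already_at_max": sum(1 for r in results if r.pre_score == max_score),
    }


# Hypothetical scores on a five-question test
results = [
    TestResult("A", pre_score=2, post_score=5),
    TestResult("B", pre_score=5, post_score=5),
    TestResult("C", pre_score=1, post_score=3),
]
summary = summarize(results, max_score=5)
print(summary)
```

Counting the participants who were already at the top score on the pre-test keeps you from reading their lack of improvement as a failure of the program.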

A sample rubric for a skills test. In the skills test, participants use a compass and map to follow directions and identify their ending position on the map.

Observation

Observation, like a test, directly evaluates the participant’s experience. In programs that emphasize “soft skills” like teamwork, observation often makes more sense than a skills or knowledge test.

This method is the least intrusive for the participants, but the most complicated for the evaluator to do well. Observation can be very subjective. To counteract this, observers need to look for very specific things based on what you’re trying to evaluate. Do you want to measure participant engagement in a science experiment? Observers should look for on-topic conversations between participants, and for participants asking the facilitator questions or answering the facilitator’s questions. Do you want to measure enjoyment? Look for smiles and laughter. Do you want to evaluate whether your instruction is clear? Count the number of times someone asks a clarifying question or expresses confusion.

It takes some real thinking on the front end to decide which outward, observable signs are reliable indicators of what you’re evaluating. Then, you need to make sure that all observers are looking for the same signs and recording them the same way. If you have a small number of observers, you can have a conversation about how to conduct the observation. If you have a larger number of observers, write up a very detailed checklist for them.

This observation checklist was given to summer camp counselors who would be watching their campers participate in a new program. Counselors were watching for signs of engagement and scientific thinking. The objective check-boxes (No Groups, Some Groups, All Groups) allowed counselors to complete the observation with little to no front-end training.
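
Once the checklists come back, the check-boxes can be tallied across observers. The sketch below is one possible way to do that in Python. The three levels come from the checklist described above, but the indicator names, the `tally` function, and the sample sheets are hypothetical.

```python
from collections import Counter

# Check-box options from the observation checklist
LEVELS = ("No Groups", "Some Groups", "All Groups")


def tally(checklists):
    """Count how often each level was checked for each indicator."""
    totals = {}
    for sheet in checklists:
        for indicator, level in sheet.items():
            if level not in LEVELS:
                raise ValueError(f"Unknown level {level!r} for {indicator!r}")
            totals.setdefault(indicator, Counter())[level] += 1
    return totals


# Hypothetical checklists from three counselors (indicator names are invented)
sheets = [
    {"On-topic conversation": "All Groups", "Asked questions": "Some Groups"},
    {"On-topic conversation": "Some Groups", "Asked questions": "Some Groups"},
    {"On-topic conversation": "All Groups", "Asked questions": "No Groups"},
]
totals = tally(sheets)

for indicator, counts in totals.items():
    print(indicator, {level: counts[level] for level in LEVELS})
```

Rejecting any answer outside the three check-box options catches handwritten variations early, which helps keep the tallies comparable from one observer to the next.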

Let’s Get Going!

The four methods we’ve discussed (audience tracking, surveys, skills or knowledge tests, and observation) each ask different things of the evaluator and produce different kinds of data. The most effective evaluation will include at least two of these methods. If you’re evaluating a brand-new program that’s still in development, you may even use three or four different methods. (For an existing program, more than two methods can be a waste of resources.) The goals of your evaluation will inform your choice of methods.

But most importantly, you should be using at least one of these methods for every one of your programs. You need to know what you’re doing well and what you can improve upon, and it’s helpful to have positive evaluation feedback to use the next time you need to apply for a grant or make a presentation to your organization’s board. Continually using at least one evaluation technique will give you a wide range of feedback to draw on.

Happy evaluating!